I'm Rob DiPietro, a PhD student in the Department of Computer Science at Johns Hopkins, where I'm advised by Gregory D. Hager. My research focuses on machine learning for complex time-series data, applied primarily to health care. For example, is it possible to learn meaningful representations of surgical motion without supervision? And can we use these representations to improve automated skill assessment and automated coaching during surgical training?

Recent News

Our paper on surgical activity recognition has been accepted as an oral presentation at MICCAI 2016:

R. DiPietro, C. Lea, A. Malpani, N. Ahmidi, S. Vedula, G.I. Lee, M.R. Lee, G.D. Hager: Recognizing Surgical Activities with Recurrent Neural Networks. Medical Image Computing and Computer Assisted Intervention (2016).

http://arxiv.org/abs/1606.06329

https://github.com/rdipietro/miccai-2016-surgical-activity-rec

Recent Projects

Unsupervised Learning for Surgical Motion

Here we learn meaningful representations of surgical motion, without supervision, by learning to predict the future. This is accomplished by combining an RNN encoder-decoder with mixture density networks to model the distribution over future motion given past motion.

The visualization shows 2-D dimensionality reductions (using t-SNE) of our obtained encodings, colored according to high-level activity. We emphasize that these activity labels were not used during training. The three primary activities are suture throw (green), knot tying (orange), and grasp pull run suture (red), while the final activity, intermaneuver segment (blue), encompasses everything that occurs in between the primary activities.

(Under submission; link to paper and PyTorch code coming soon.)
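As a rough illustration of the mixture-density idea mentioned above (not the implementation from the paper, which is not yet linked here), the sketch below computes the negative log-likelihood of a scalar target under a 1-D Gaussian mixture. In a mixture density network, a network would output the weights, means, and standard deviations. Here they are passed in directly, and the function name `gaussian_mixture_nll` is a hypothetical choice for this example.

```python
import math


def gaussian_mixture_nll(y, weights, means, stds):
    """Negative log-likelihood of scalar y under a 1-D Gaussian mixture.

    Assumes weights are strictly positive and sum to 1, and stds are positive.
    """
    # Log of each weighted component density: log w + log N(y; m, s^2).
    log_terms = [
        math.log(w) - math.log(s) - 0.5 * math.log(2 * math.pi)
        - 0.5 * ((y - m) / s) ** 2
        for w, m, s in zip(weights, means, stds)
    ]
    # Log-sum-exp over components for numerical stability.
    top = max(log_terms)
    return -(top + math.log(sum(math.exp(t - top) for t in log_terms)))


# Hypothetical two-component mixture evaluated at y = 0.3.
nll = gaussian_mixture_nll(0.3, [0.7, 0.3], [0.0, 1.0], [1.0, 0.5])
```

Minimizing this quantity over a dataset of (past, future) pairs is one common way to train such a model. The multivariate case used for real motion data would need a multivariate density in place of the scalar one here.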

RNNs for Extremely Long-Term Dependencies

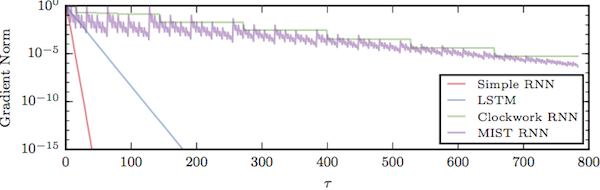

Here we develop mixed history recurrent neural networks (MIST RNNs), which use an attention mechanism over exponentially-spaced delays to the past in order to capture extremely long-term dependencies.

The visualization shows gradient norms as a function of delay, $\tau$. These norms can be interpreted as how much learning signal is available for a loss at time $t$ from events at time $t - \tau$ in the past.

For more information, please see our paper, which was accepted as a workshop paper at ICLR 2018. Code for an older version of our paper can also be found here; we hope to release an updated version soon (now in PyTorch).
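To make "attention over exponentially-spaced delays" more concrete, here is a minimal sketch, assuming delays of $2^0, 2^1, 2^2, \ldots$ and a softmax over one score per delay. It is only an illustration of the idea, not the MIST RNN implementation: in a real model the scores would come from learned parameters, whereas here they are supplied by the caller. The names `exponential_delays` and `mix_history` are hypothetical.

```python
import math


def exponential_delays(num_delays):
    """Delays 1, 2, 4, ..., 2^(num_delays - 1)."""
    return [2 ** i for i in range(num_delays)]


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_history(history, scores):
    """Convex combination of past states at exponentially spaced delays.

    history: list of state vectors (lists of floats), oldest first,
             so history[-1] is the state one step back.
    scores:  one unnormalized attention score per delay (assumed given).
    """
    delays = exponential_delays(len(scores))
    weights = softmax(scores)
    dim = len(history[0])
    mixed = [0.0] * dim
    for w, tau in zip(weights, delays):
        # State tau steps back; states before the start are treated as zeros
        # (an assumption made for this sketch).
        state = history[-tau] if tau <= len(history) else [0.0] * dim
        for k in range(dim):
            mixed[k] += w * state[k]
    return mixed


# Four past 1-D states, equal attention over delays 1, 2, and 4.
mixed = mix_history([[1.0], [2.0], [3.0], [4.0]], [0.0, 0.0, 0.0])
```

Because the delays grow exponentially, covering a history of length $T$ takes only about $\log_2 T$ delays, which is what makes direct connections to the distant past affordable.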

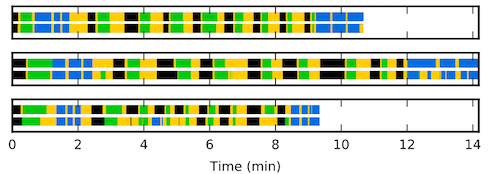

RNNs for Surgical Activity Recognition

Here we apply long short-term memory to the task of surgical activity recognition from motion (e.g., x, y, z over time), and in doing so improve state-of-the-art performance in terms of both accuracy and edit distance.

The visualization shows results for three test sequences: best performance (top), median performance (middle), and worst performance (bottom), as measured by accuracy. In all cases, we are recognizing four activities over time – suture throw (black), knot tying (blue), grasp pull run suture (green), and intermaneuver segment (yellow) – showing ground truth labels above and predictions below.

For more information, please see our paper, which was accepted as an oral presentation at MICCAI 2016. Code is also available here.
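For readers unfamiliar with the two metrics named above, the sketch below shows one plausible way to compute them for per-frame label sequences. It assumes that accuracy is the fraction of correctly labeled frames and that edit distance is the Levenshtein distance between segment-level sequences (consecutive repeats collapsed). Whether and how the paper normalizes edit distance is not stated here, so this sketch returns the raw count. All function names are hypothetical.

```python
from itertools import groupby


def frame_accuracy(truth, pred):
    """Fraction of frames whose predicted label matches the ground truth."""
    return sum(t == p for t, p in zip(truth, pred)) / len(truth)


def collapse(labels):
    """Collapse consecutive repeats: per-frame labels -> segment-level labels."""
    return [label for label, _ in groupby(labels)]


def edit_distance(a, b):
    """Levenshtein distance between two sequences (row-by-row dynamic program)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (x != y)))  # substitution or match
        prev = curr
    return prev[-1]


# Toy example: S = suture throw, K = knot tying, G = grasp pull run suture.
truth = list("SSSKKKGG")
pred = list("SSKSKKGG")
acc = frame_accuracy(truth, pred)                      # 0.75
dist = edit_distance(collapse(truth), collapse(pred))  # 2
```

The two metrics are complementary: accuracy ignores temporal structure, while segment-level edit distance penalizes spurious or out-of-order segments even when few frames are wrong.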

Open-Source Contributions

Nowadays nearly all of my code is written using Python, NumPy, and PyTorch. I moved to PyTorch from TensorFlow in 2017, and my experience has resembled Andrej Karpathy's :).

I've made small open-source contributions (code, tests, and/or docs) to TensorFlow, PyTorch, Edward, Pyro, and other projects.

Some of my projects can be found here: GitHub

Teaching

I've been fortunate to have the chance to teach quite a bit at Hopkins. I love teaching, despite its tendency to devour time.

Johns Hopkins University

- Co-Instructor for EN.601.382, Machine Learning: Deep Learning Lab. Spring, 2018.

- Co-Instructor for EN.601.482/682, Machine Learning: Deep Learning. Spring, 2018.

- Teaching Assistant for EN.601.475/675, Introduction to Machine Learning. Fall, 2017.

- Instructor for EN.500.111, HEART: Machine Learning for Surgical Workflow Analysis. Fall, 2015.

- Teaching Assistant for EN.600.476/676, Machine Learning: Data to Models. Spring, 2015.

- Co-Instructor for EN.600.120, Intermediate Programming. Spring, 2014.

- Instructor for EN.600.101, MATLAB for Data Analytics. Intersession, 2014.

Tutorials

- A Friendly Introduction to Cross-Entropy Loss. Introduces entropy, cross entropy, KL divergence, and discusses connections to likelihood.

- TensorFlow Scan Examples. This is an old tutorial in which we build, train, and evaluate a simple recurrent neural network from scratch. I do not recommend this tutorial. Instead, I recommend switching to PyTorch if at all possible :).
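As a small taste of the quantities covered in the cross-entropy tutorial listed above, the following sketch (written for this page, not taken from the tutorial) checks the identity $H(p, q) = H(p) + D_{\mathrm{KL}}(p \,\|\, q)$ numerically for discrete distributions, using natural logarithms. The example distributions are arbitrary.

```python
import math


def entropy(p):
    """H(p) = -sum_i p_i log p_i, with 0 log 0 treated as 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """H(p, q) = -sum_i p_i log q_i (assumes q_i > 0 wherever p_i > 0)."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """D_KL(p || q) = sum_i p_i log(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


p = [0.5, 0.25, 0.25]
q = [0.25, 0.5, 0.25]

# Cross entropy decomposes into entropy plus KL divergence.
gap = cross_entropy(p, q) - (entropy(p) + kl_divergence(p, q))

# With a one-hot target, cross entropy is the negative log-probability
# that q assigns to the correct class.
one_hot_loss = cross_entropy([0.0, 1.0, 0.0], q)  # equals -log(0.5)
```

The one-hot case is where the connection to likelihood shows up: summing this loss over a dataset gives the negative log-likelihood of the labels under the model.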